Table of contents

- 🚀 Introduction

- What is Kubernetes? Unveiling the Power of K8s 🛠️

- The Benefits of Embracing K8s 🌐

- Unveiling the Architecture: Navigating the Kubernetes Landscape 🏗️

- The Control Plane: Kubernetes' Command Center 🕹️

- Kubectl vs. Kubelet: Deciphering Their Roles 🔍

- The API Server: A Keystone in the Kubernetes Arch 🗝️

- Conclusion

🚀 Introduction

Welcome to the dynamic world of Kubernetes, where container orchestration meets scalability, resilience, and efficiency! In this introductory section, we'll embark on a journey to unravel the magic behind Kubernetes, exploring its significance in modern DevOps practices. From managing containers to automating deployment and scaling, Kubernetes has become the cornerstone of cloud-native applications. Let's dive into the basics and discover how Kubernetes empowers developers and operations teams to embrace a new era of efficiency and innovation. 🎉

What is Kubernetes? Unveiling the Power of K8s 🛠️

In the fast-paced world of DevOps, managing containerized applications efficiently is a paramount challenge. Enter Kubernetes, often abbreviated as K8s. But why the quirky "K8s" name? Well, it's a clever play on the eight characters between 'K' and 's' in "Kubernetes." Now that we've tackled the nomenclature, let's delve into what Kubernetes truly is.

At its core, Kubernetes is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Whether you're running Docker or any other container runtime, Kubernetes provides a unified API and a robust set of tools to simplify the orchestration of your containers, making the entire process scalable, resilient, and streamlined.

The Benefits of Embracing K8s 🌐

Why should you jump onto the Kubernetes bandwagon? The benefits are numerous. First and foremost, Kubernetes abstracts away the complexities of managing containers, allowing developers to focus on building and shipping applications. Some key advantages include:

Scalability: Kubernetes effortlessly scales your applications up or down based on demand, ensuring optimal performance.

Resilience: It automatically handles failures, ensuring that your applications remain available even if individual containers or nodes fail.

Portability: Kubernetes provides a consistent environment across development, testing, and production, fostering a seamless transition of applications.

Automation: With declarative configurations and automated deployment processes, Kubernetes simplifies and accelerates the delivery of applications.

Unveiling the Architecture: Navigating the Kubernetes Landscape 🏗️

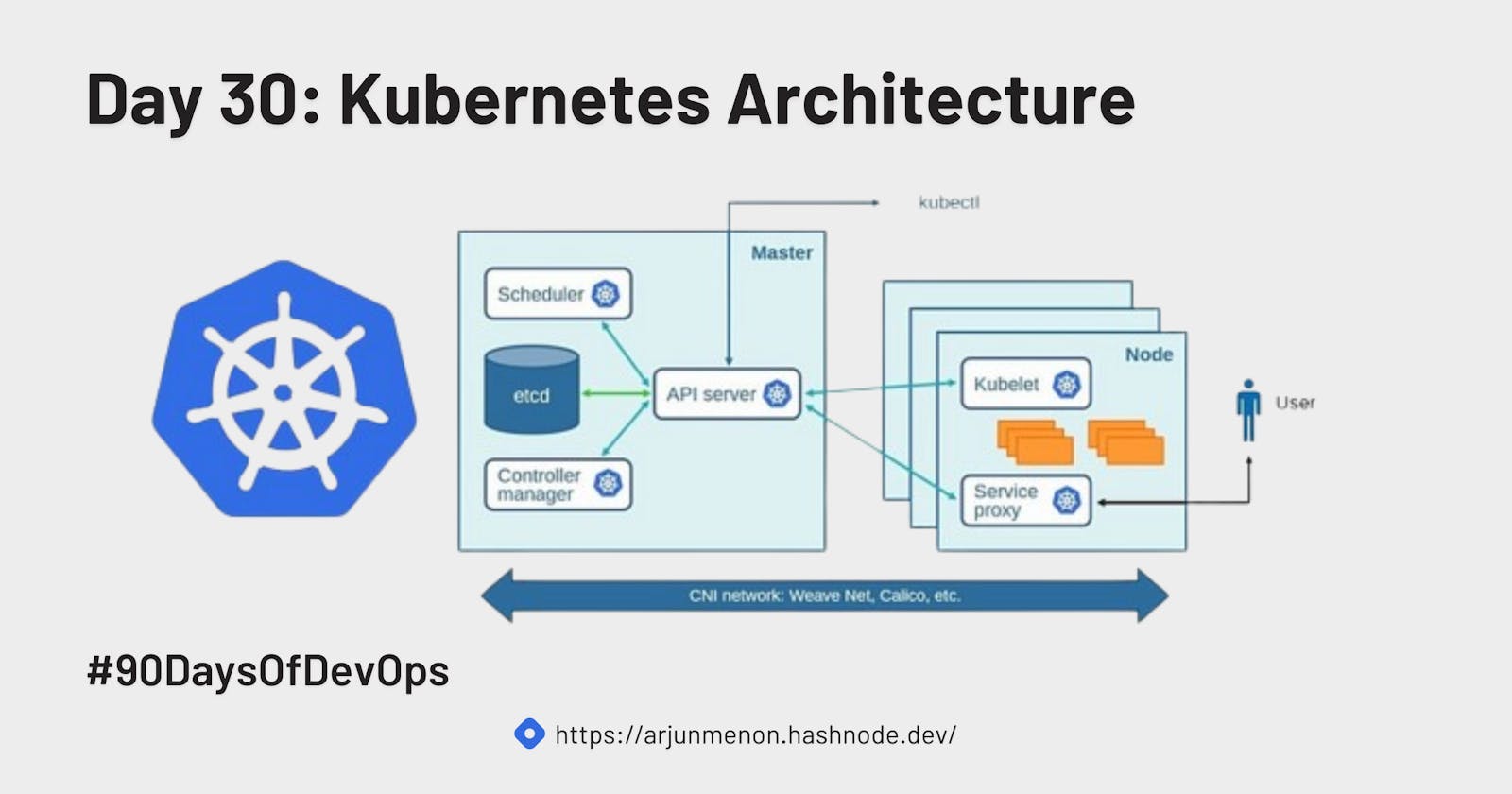

Understanding the architecture of Kubernetes is like holding a treasure map to navigate the dynamic landscape of container orchestration. Let's embark on a journey through the fundamental components that make up the robust architecture of Kubernetes.

Nodes: Where the Magic Happens 🌐

At the core of a Kubernetes cluster are nodes, the workhorses responsible for running your applications. There are two types of nodes:

Master Node: This is the control center, managing the overall state of the cluster. It houses essential components like the API server, scheduler, controller manager, and etcd.

Worker Nodes: These nodes host your containers. They run the applications and communicate with the master node to ensure the desired state is maintained.

Control Plane Components: The Puppet Masters 🕹️

The master node hosts crucial components collectively known as the Control Plane, orchestrating the actions within the cluster:

API Server: The gateway to Kubernetes, the API server exposes the Kubernetes API. All interactions, whether from users or other components, are processed and validated here.

etcd: A distributed key-value store acts as the memory of Kubernetes. It stores configuration data and the current state of the cluster. Consistency and fault-tolerance make etcd a critical component for reliability.

Scheduler: Think of the scheduler as a wise taskmaster. It's responsible for assigning work (containers) to the worker nodes based on factors like resource availability and constraints.

Controller Manager: This component ensures that the cluster remains in the desired state. It contains various controllers, each responsible for specific tasks, such as node and replication controllers.

Kubelet: The Node's Guardian 🛡️

On each worker node, the kubelet is the guardian. It communicates with the Control Plane and ensures that containers are running as expected on its node. It takes care of starting, stopping, and maintaining application containers.

Kube-Proxy: Networking Maestro 🌐

Kube-proxy is responsible for network communication within the cluster. It manages network rules, enabling seamless communication between applications across different nodes.

Pods: The Smallest Deployable Units 🚀

Pods are the fundamental units in Kubernetes, representing the smallest deployable entities. A pod can host one or more containers, tightly coupled and sharing the same network namespace. They facilitate seamless communication and resource sharing.

Services: Network Abstraction 🌐

Services provide a stable endpoint for accessing pods. They abstract away the complexity of dynamic pod IPs, ensuring that even if pods are rescheduled or scaled, the service remains accessible.

Volumes: Data Persistence 🔒

Volumes in Kubernetes provide persistent storage for containers. They decouple data from the container lifecycle, allowing data to persist even if the container is terminated.

The Control Plane: Kubernetes' Command Center 🕹️

At the heart of Kubernetes lies the Control Plane, the brain orchestrating the entire show. Comprising several components, including the API server, etcd, scheduler, and controller manager, the Control Plane dictates the desired state of your cluster and tirelessly works to maintain it.

API Server: The API server acts as the gateway for all communication within the cluster. It validates and processes requests, ensuring the coordination of all activities.

etcd: This distributed key-value store serves as Kubernetes' memory, storing the configuration data and the current state of the cluster.

Scheduler: The scheduler is responsible for assigning work (containers) to nodes based on resource availability and application requirements.

Controller Manager: This component oversees the various controllers responsible for maintaining the desired state, ensuring continuous reconciliation.

Kubectl vs. Kubelet: Deciphering Their Roles 🔍

In the Kubernetes realm, both kubectl and kubelet play critical roles, but they serve different purposes.

kubectl: Think of

kubectlas the command-line interface that allows you to interact with your Kubernetes cluster. You use it to deploy applications, inspect resources, and manage the overall cluster state.kubelet: On the other hand,

kubeletis an agent that runs on each node in the cluster. It communicates with the Control Plane and ensures that containers are running as expected on the node.

The API Server: A Keystone in the Kubernetes Arch 🗝️

The API server acts as the primary interface for all interactions within your Kubernetes cluster. It exposes the Kubernetes API, validating and processing requests, and serving as the entry point for kubectl and other components. Essentially, the API server is the linchpin that facilitates seamless communication and coordination across the entire Kubernetes system.

Conclusion

Kubernetes is more than just a buzzword in the DevOps landscape—it's a game-changer. From its clever nomenclature to the intricacies of its architecture, understanding Kubernetes opens up a world of possibilities for efficiently managing containerized applications. So, whether you're a seasoned DevOps engineer or a curious newcomer, embracing Kubernetes could be the key to unlocking a new level of agility and scalability in your development workflow.

Let's connect on LinkedIn.

Checkout my GitHub profile.